Learning to Rank

| Name | Parth Gupta |

| IRC nick | parth |

| Timezone | UTC+5:30 |

| Work hours | 06:00-14:00 UTC |

| Primary mentor | Richard Boulton |

| Co-mentor | Whole Xapian Community! |

| Code | https://github.com/xapian/xapian/tree/svn/gsoc2011-parth |

To start with the code

> svn co svn://svn.xapian.org/xapian/branches/gsoc2011-parth xapian > cd xapian > ./bootstrap > ./configure > make

Please refer xapian-core/HACKING and xapian-core/docs/letor.rst for the general installation issues. 'letor.rst' also contains the overall documentation of the LTR project.

Let me introduce my self first. I am Parth Gupta and a masters student at DA-IICT, India with Information Retrieval major. I have recently furnished my masters thesis on Learning to Rank. I wish to extend the power of Learning to Rank to Xapian Library with the help of you people and I am excited about it!

My idea is based on the concept of Learning to Rank(Letor) which has gained immense momentum in the IR and Machine Learning community. Here we describe a query-document pair as a feature vector where features can be query dependant, document dependent ( pagerank, inLink/outLink Num, Num of children etc) and query-document dependent (TF-IDF Score, BM25 Score etc). Here we have to train our Ranking Function from the training data to assign weight accordingly to a document for a given query. You can find plenty of papers on Learning to Rank in Top-Tier conferences of IR like SIGIR and Machine Learning like ICML, CIKM, NIPS etc.

Physically the project is, With unsupervised ranking like BM25 weighting scheme, we may fetch some relevant documents but they may be very back in the rank list like 20 or 50th position, while in Learning to Rank we want to identify the characteristics of these relevant documents by the feature vector and want to rank them in top positions in the list. The idea behind is, through supervised learning we can incorporate many features and not just the BM25 score so that if BM25 pushes it back into the list, with the help of other features we can bring it on top. So the method will be first fetch 'n' documents using say BM25 scores then for them prepare feature vectors and then assign the scores to them using supervised Ranking Function.

Now precisely, I want to incorporate Support Vector Machine(SVM) based Learning to the ranking function, which will be off-line and will produce a weight vector(W) of the dimension similar to the feature vector so that when a new query comes and we have prepared a feature vector for each document for that query, we can easily assign a real value score to the document and then sort the documents in the decreasing order of the scores to produce the rank list.

- The data for the training will look something like this

0 qid:10032 1:0.130742 2:0.000000 3:0.333333 4:0.000000 ... 45:0.750000 46:1.000000 #docid = GX140-98-13566007

1 qid:10032 1:0.593640 2:1.000000 3:0.000000 4:0.000000 ... 45:0.500000 46:0.000000 #docid = GX256-43-0740276

where the first column is relevance label, and feature with their normalised values are given after that. So the learning model will learn to rank all the documents with relevance 1 above the first irrelevant document i.e. relevance label 0. Currently I will consider it as the pointwise approach like each query-document pair i.e. each row in the training data will be considered independently. So algorithm can accept the input directly. In case of Support Vector Machine We have to solve problem of the problem of min {1/2 * ||W||2 + Sum(errors)} where error means instance is miss-classified with the loss function (y - y')2 where y is the actual relevance value and y' is prediction.

Training Data

For machine learning part we would need some data which has relevance information associated for queries [Gold Standards] so from such data we may prepare data as shown above. I have thought to use data from one of the IR Evaluation forum where data is freely available.

I want to consider a subset of INEX Ad-Hoc data to prepare the training data for learning. It is the collection containing wikipedia pages and is sufficiently large. Whole data is of 2,666,190 articles [documents] and of size ~50 GB. But we would consider only a subset of it with 668,135 documents of size 11.4 GB. Queries and their relevance judgements are also available to build training data. Reason behind using this collection is, it contains documents in xml format and have information like title, body etc. Also wikipedia documents will be quite general than any domain specific dataset.

Features [1]

I will shortly introduce some potential features to be considered for learning and would be happy to have your comment on that. Basically this time we would focus on the features which are readily available and/or can easily derivable in xapian.

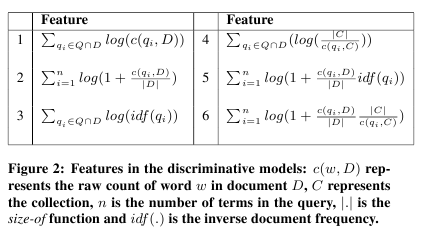

Features are shown below.

Here mentioned features are some form of functions of Term Frequency, IDF(Inverse Document Frequency) etc. These six features are defined for title only, body only and for whole document. So such 6*3=18 features will be there. These features are tested in [1] and have shown big improvement in performance. There will be one more feature, BM25 score, given for the document by Xapian bm25 weighting scheme, so there will be 18+1=19 features in total.

Learning Algorithm

I plan to use Support vector machines(SVM) for learning the ranking functions. In the figure below the hyper plane dividing two classes will be learnt through the SVM and spots represent documents for a particular query. If you consider Black spots as relevant documents and white irrelevant then for relevance, then we can say the black spot farthest from the hyper plane is highest relevant and similarly the white spot farthest from the hyperplane is most irrelevant documents and same for other docs. So we may return the ranklist in terms of documents distance from the hyperplane and its class.

Letor Framework in Xapian

References

[1] Nallapati, R. Discriminative models for information retrieval. Proceedings of SIGIR 2004 (pp. 64-71).

Attachments (2)

-

svm_demo.png

(4.1 KB

) - added by 15 years ago.

svm_demo

- features.png (33.4 KB ) - added by 15 years ago.

Download all attachments as: .zip